Introduction

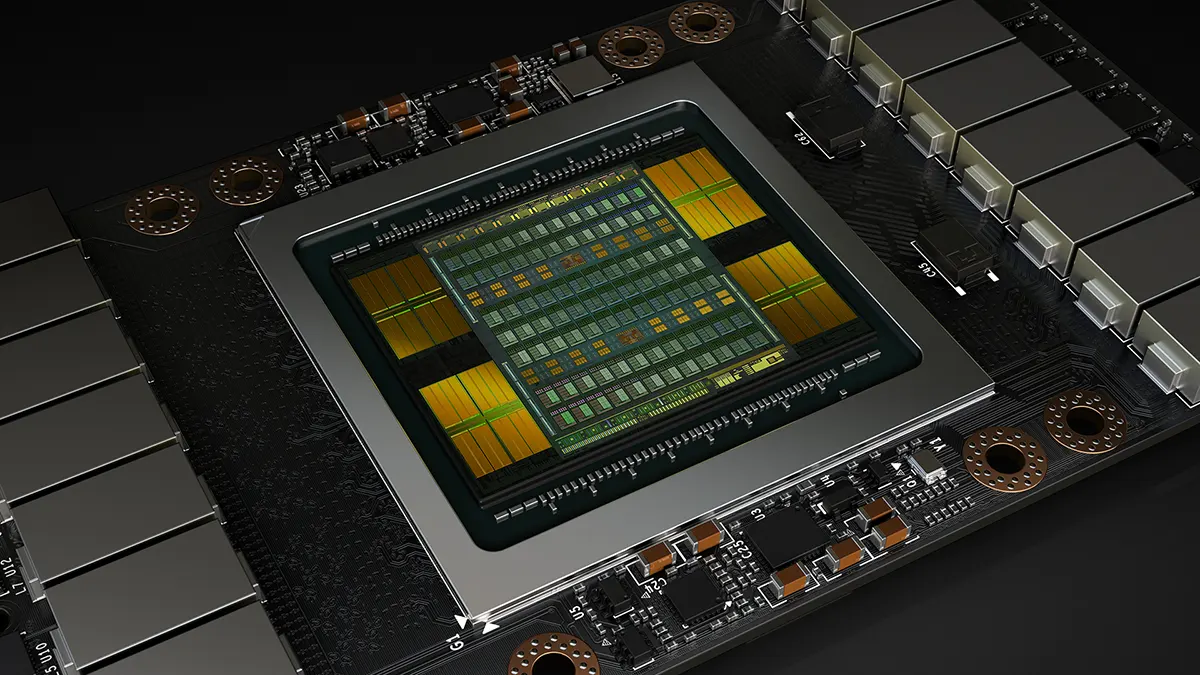

In the fast-paced world of AI, machine learning, and high-performance computing (HPC), NVIDIA graphics processing units (GPUs) are a cornerstone of modern computing. Known for their parallel processing power, they are used not only for gaming but also in data centers, autonomous driving, deep learning, rendering, and scientific simulations.

Whether you're a developer looking for the best GPU to train neural networks or a system integrator designing the next AI supercomputer, understanding the part numbers and key parameters of NVIDIA’s main compute GPUs is essential.

This post breaks down NVIDIA’s top-tier compute-oriented GPUs, explains their naming conventions, covers the key architectural metrics, offers a detailed comparison, and helps you identify which part number best fits your workload.

Understanding NVIDIA GPU Product Lines

Before diving into part numbers and specs, it's important to understand the primary product families in NVIDIA’s compute ecosystem:

1.1 NVIDIA Data Center and HPC GPUs

These are designed specifically for servers, scientific workloads, and AI/ML training and inference.

• NVIDIA A100 (Ampere)

• NVIDIA H100 (Hopper)

• NVIDIA V100 (Volta)

• NVIDIA L40/L40S (Ada Lovelace, visual computing and AI inference)

• NVIDIA RTX A6000 (Ampere, professional workstation GPU)

• NVIDIA T4 (Turing, inference-focused)

1.2 NVIDIA Professional Visualization GPUs

These include the Quadro RTX and RTX A-series, primarily for rendering, simulations, and data science.

1.3 NVIDIA GeForce RTX (Prosumer Level)

While built for gaming, high-end GeForce RTX 30 and 40 Series GPUs are often repurposed by developers and researchers for model training due to cost efficiency.

1.4 NVIDIA Jetson Modules

These embedded GPU modules (e.g., Jetson Orin, Jetson Xavier) are used in edge AI computing.

Decoding NVIDIA GPU Part Numbers

NVIDIA GPU part numbers follow a general pattern. For example:

• H100 SXM5 80GB:

1. H100: GPU model (the "H" denotes the Hopper architecture)

2. SXM5: Form factor (a socketed module used in servers, as opposed to a PCIe card)

3. 80GB: Memory capacity

Understanding these identifiers helps you distinguish between SKUs that might seem similar.
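The breakdown above can be sketched as a small parser. This is a minimal illustration that assumes names shaped like the example ("H100 SXM5 80GB"); the `GpuName` type, the `parse_gpu_name` function, and the regular expressions are hypothetical helpers, not an official NVIDIA naming grammar.

```python
import re
from typing import NamedTuple, Optional


class GpuName(NamedTuple):
    model: str
    form_factor: Optional[str]
    memory_gb: Optional[int]


# Hypothetical patterns for names shaped like "H100 SXM5 80GB".
# They are an assumption for illustration, not an NVIDIA specification.
_FORM_FACTOR = re.compile(r"^(SXM\d*|PCIe)$", re.IGNORECASE)
_MEMORY = re.compile(r"^(\d+)GB$", re.IGNORECASE)


def parse_gpu_name(name: str) -> GpuName:
    """Split a product name into model, form factor, and memory size."""
    tokens = name.split()
    if tokens and tokens[0].upper() == "NVIDIA":
        tokens = tokens[1:]  # drop the vendor prefix if present

    form_factor = None
    memory_gb = None
    model_parts = []
    for token in tokens:
        memory_match = _MEMORY.match(token)
        if memory_match:
            memory_gb = int(memory_match.group(1))
        elif _FORM_FACTOR.match(token):
            form_factor = token
        else:
            model_parts.append(token)  # anything else is part of the model name
    return GpuName(" ".join(model_parts), form_factor, memory_gb)


print(parse_gpu_name("H100 SXM5 80GB"))
```

Tokens the parser does not recognize are kept as part of the model name, so a name such as "RTX A6000" passes through with no form factor or memory size attached.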

Comparison Table of NVIDIA Compute-Class GPUs

Below is a detailed comparison of the most powerful NVIDIA GPUs used for data center AI, ML, and HPC workloads:

| GPU Model | Architecture | CUDA Cores | Memory Type | Memory Size | Tensor Cores | FP32 TFLOPS | FP64 TFLOPS | Peak Tensor TFLOPS (format) | Form Factor |
|---|---|---|---|---|---|---|---|---|---|
| NVIDIA H100 SXM5 | Hopper | 16896 | HBM3 | 80 GB | 528 | 67 | 34 | 1979 (FP8) | SXM5 |
| NVIDIA H100 PCIe | Hopper | 14592 | HBM2e | 80 GB | 456 | 51 | 26 | 1513 (FP8) | PCIe |
| NVIDIA A100 80GB SXM4 | Ampere | 6912 | HBM2e | 80 GB | 432 | 19.5 | 9.7 | 156 (TF32) | SXM4 |
| NVIDIA A100 40GB PCIe | Ampere | 6912 | HBM2 | 40 GB | 432 | 19.5 | 9.7 | 156 (TF32) | PCIe |
| NVIDIA V100 32GB SXM2 | Volta | 5120 | HBM2 | 32 GB | 640 | 15.7 | 7.8 | 125 (FP16) | SXM2 |
| NVIDIA RTX A6000 | Ampere | 10752 | GDDR6 | 48 GB | 336 | 38.7 | 1.2 | 309.7 (FP16, with sparsity) | PCIe |
| NVIDIA L40 | Ada Lovelace | 18176 | GDDR6 | 48 GB | 568 | 90.5 | 2.9 | 1450 (FP8) | PCIe |
| NVIDIA T4 | Turing | 2560 | GDDR6 | 16 GB | 320 | 8.1 | 0.13 | 65 (FP16) | PCIe |
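One way to put a table like this to work is to hold a few columns in code and filter on them. The sketch below copies only the memory and form-factor columns from the table; the `GpuSpec` class and `shortlist` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    model: str
    architecture: str
    memory_type: str
    memory_gb: int
    form_factor: str


# Memory and form-factor columns copied from the comparison table above.
GPUS = [
    GpuSpec("H100 SXM5", "Hopper", "HBM3", 80, "SXM5"),
    GpuSpec("H100 PCIe", "Hopper", "HBM2e", 80, "PCIe"),
    GpuSpec("A100 80GB SXM4", "Ampere", "HBM2e", 80, "SXM4"),
    GpuSpec("A100 40GB PCIe", "Ampere", "HBM2", 40, "PCIe"),
    GpuSpec("V100 32GB SXM2", "Volta", "HBM2", 32, "SXM2"),
    GpuSpec("RTX A6000", "Ampere", "GDDR6", 48, "PCIe"),
    GpuSpec("L40", "Ada Lovelace", "GDDR6", 48, "PCIe"),
    GpuSpec("T4", "Turing", "GDDR6", 16, "PCIe"),
]


def shortlist(min_memory_gb, pcie_only=False):
    """Return models with at least min_memory_gb, optionally PCIe cards only."""
    return [
        gpu.model
        for gpu in GPUS
        if gpu.memory_gb >= min_memory_gb
        and (not pcie_only or gpu.form_factor == "PCIe")
    ]


# Cards with at least 48 GB that fit a standard PCIe slot.
print(shortlist(48, pcie_only=True))
```

Filtering on memory first is a deliberate choice here: a model that does not fit in GPU memory cannot benefit from higher TFLOPS figures.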

Top GPU Models and Use Cases

4.1 NVIDIA H100 (Hopper)

Use case: Large language models, transformer training, generative AI, supercomputing

Highlights:

• Supports TensorFloat-32 and FP8 precision

• Roughly 2x to 6x faster than the A100 on AI workloads, depending on the model and numeric precision

• NVLink & NVSwitch integration for scale-out

4.2 NVIDIA A100 (Ampere)

Use case: AI/ML training, inference, data analytics

Highlights:

• Versatile across training, inference, and analytics workloads

• Multi-Instance GPU (MIG) splits one card into up to seven isolated instances for efficient resource usage

4.3 NVIDIA V100 (Volta)

Use case: Scientific computing, early deep learning

Highlights:

• Strong FP64 performance (7.8 TFLOPS on the SXM2 part)

• Older architecture but still used in many HPC systems

4.4 NVIDIA RTX A6000

Use case: Workstation AI, rendering, simulation

Highlights:

• 48 GB of GDDR6 memory on a standard PCIe card

• Can be deployed in desktops

4.5 NVIDIA L40/L40S

Use case: Enterprise AI, visualization, cloud gaming

Highlights:

• Ada Lovelace architecture with fourth-generation Tensor Cores and FP8 support

• Targeted at AI inference and visual compute tasks

Key Metrics Explained

5.1 CUDA Cores

CUDA cores are the GPU's general-purpose arithmetic units. Thousands of them run in parallel, and their count and clock speed together set the card's peak FP32 throughput.
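The link between CUDA core count and the FP32 TFLOPS column can be checked with a rule of thumb: each core can complete one fused multiply-add per clock cycle, which counts as two floating-point operations. The sketch below applies it; the boost clocks used (1.41 GHz for the A100, 1.53 GHz for the V100 SXM2) are assumptions not stated in this article, and `peak_fp32_tflops` is a hypothetical helper name.

```python
def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Estimate theoretical peak FP32 throughput in TFLOPS."""
    flops_per_core_per_cycle = 2  # one fused multiply-add = 2 operations
    gflops = cuda_cores * flops_per_core_per_cycle * boost_clock_ghz
    return gflops / 1000.0


# Boost clocks are assumed values, not taken from the table above.
print(round(peak_fp32_tflops(6912, 1.41), 2))  # A100: 19.49
print(round(peak_fp32_tflops(5120, 1.53), 2))  # V100 SXM2: 15.67
```

Both results land close to the 19.5 and 15.7 TFLOPS listed in the comparison table. These are theoretical peaks; sustained throughput on real workloads is lower.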

5.2 Tensor Cores

Specialized units that accelerate matrix multiply-accumulate operations, the core arithmetic of deep learning.

5.3 Memory Bandwidth and Type

• HBM3/HBM2e: Ultra-high bandwidth, critical for deep learning

• GDDR6: Balanced for visualization and moderate AI workloads
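To see why bandwidth matters, consider a memory-bound step that has to read every model weight once. The time it takes can be no shorter than the bytes read divided by the memory bandwidth. The sketch below is a rough lower bound under that assumption; the 14 GB weight size and both bandwidth figures are illustrative round numbers, not datasheet values, and `min_stream_time_ms` is a hypothetical helper.

```python
def min_stream_time_ms(num_bytes: float, bandwidth_gb_per_s: float) -> float:
    """Lower bound on the time to read num_bytes once from GPU memory."""
    seconds = num_bytes / (bandwidth_gb_per_s * 1e9)
    return seconds * 1e3


weights_bytes = 14e9  # hypothetical: about 7 billion parameters at 2 bytes each

# Illustrative bandwidth classes (assumed round numbers, in GB/s).
for label, bandwidth in [("HBM-class", 3000), ("GDDR6-class", 900)]:
    print(label, round(min_stream_time_ms(weights_bytes, bandwidth), 2), "ms")
```

Under these assumptions the HBM-class card finishes the pass in under a third of the time, regardless of how many TFLOPS either card offers.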

5.4 Form Factor: PCIe vs SXM

• PCIe: Standard, easier to deploy

• SXM: Proprietary NVIDIA format for max power/performance, used in HGX systems

Choosing the Right GPU Based on Workload

| Workload Type | Best NVIDIA GPU(s) | Why? |
|---|---|---|
| Training LLMs (e.g., GPT, BERT) | H100 SXM | Unmatched tensor performance, FP8 support |
| AI Inference at Scale | A100 PCIe / L40 | Efficient, scalable, cost-optimized |
| Rendering & Simulation | RTX A6000 / L40 | High FP32 + visualization compatibility |
| Scientific Computing | V100 / H100 | Strong FP64 support |
| Data Center General Use | A100 / T4 | Versatile, mature software support |
| Edge AI / Embedded | Jetson AGX Orin / Xavier | Low power, small form factor |

Emerging Trends in NVIDIA Compute GPUs

• FP8 and Mixed-Precision Training: FP8 is introduced with the H100 and builds on earlier mixed-precision training, increasing efficiency in AI model training

• NVLink Switch Systems: Supporting massive GPU interconnects for supercomputing

• DGX and HGX Systems: Full-stack NVIDIA solutions with pre-integrated multi-GPU architecture

• AI Software Stack (CUDA, cuDNN, Triton): A key reason NVIDIA GPUs remain dominant in the AI space

FAQ: NVIDIA Computing Power GPU

Q1: What’s the difference between NVIDIA H100 PCIe and SXM5?

A1: The SXM5 version runs at a higher power limit and offers higher memory and NVLink bandwidth, which delivers greater performance. It is typically used in NVIDIA HGX servers. The PCIe version draws less power and fits standard servers.

Q2: Can the GeForce RTX 4090 be used for AI/ML?

A2: Yes, GeForce RTX GPUs like the 4090 are used in research and development environments. However, they lack ECC memory and other enterprise features.

Q3: What is TensorFloat-32 (TF32)?

A3: TF32 is a 19-bit data format that NVIDIA Tensor Cores use for tensor operations. It keeps FP32's 8-bit exponent but shortens the mantissa to 10 bits, which gives much higher throughput than FP32 while maintaining similar accuracy for many AI workloads.
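To get a feel for the precision TF32 gives up, the format can be emulated on the CPU. TF32 keeps FP32's 8-bit exponent but only 10 of its 23 mantissa bits, so clearing the low 13 bits of an FP32 value approximates what a Tensor Core sees. The sketch below does that with plain truncation, which is a simplifying assumption (the rounding used by real hardware may differ); `truncate_to_tf32` is a hypothetical helper name.

```python
import struct


def truncate_to_tf32(x: float) -> float:
    """Approximate TF32 by clearing the low 13 mantissa bits of an FP32 value."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # FP32 bit pattern
    bits &= 0xFFFFE000  # keep sign, 8 exponent bits, top 10 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


print(truncate_to_tf32(3.14159))       # 3.140625
print(truncate_to_tf32(1 + 2 ** -10))  # still representable
print(truncate_to_tf32(1 + 2 ** -11))  # 1.0, below TF32 resolution
```

The range of representable magnitudes is unchanged because the exponent is untouched; only about three decimal digits of precision survive in each input value.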

Q4: Is the A100 still relevant after the H100 launch?

A4: Absolutely. The A100 remains widely deployed and supported. It is a cost-effective and capable option for many AI and scientific workloads.

Q5: Which GPU should I use for an AI startup?

A5: Start with A100 PCIe or RTX A6000 based on your budget and workload. For production-scale inference, T4 is also a good option.

Q6: What software is needed to utilize NVIDIA compute GPUs?

A6: Key tools include CUDA, cuDNN, TensorRT, and Triton Inference Server, along with frameworks such as PyTorch and TensorFlow, all of which are optimized for NVIDIA GPUs.
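Before installing any of these tools, it helps to confirm that the NVIDIA driver can see the GPUs. The sketch below shells out to `nvidia-smi`, which ships with the driver, and parses its CSV output. The function names are hypothetical, and the sample string in the usage line is made-up output for illustration rather than a captured log.

```python
import shutil
import subprocess

# Ask nvidia-smi for each GPU's name and total memory (in MiB, without units).
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_list(csv_text):
    """Turn 'name, memory' lines into (name, memory_mib) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        parts = line.rsplit(",", 1)
        if len(parts) != 2:
            continue  # skip lines that are not 'name, memory'
        name, memory_mib = parts[0].strip(), parts[1].strip()
        if memory_mib.isdigit():
            gpus.append((name, int(memory_mib)))
    return gpus


def list_gpus():
    """Return detected GPUs, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    if result.returncode != 0:
        return []
    return parse_gpu_list(result.stdout)


# Hypothetical sample output, used here only to exercise the parser.
print(parse_gpu_list("NVIDIA A100-SXM4-80GB, 81920\nTesla T4, 15360\n"))
print(list_gpus())
```

An empty list from `list_gpus()` means either no driver is installed or no GPU is visible, which is worth resolving before debugging CUDA or framework installs.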

Conclusion

NVIDIA’s compute GPUs power the most advanced AI systems in the world, from ChatGPT and self-driving cars to protein folding and climate modeling. With architectures like Hopper (H100) and Ampere (A100) leading the way, understanding their part numbers and specs helps you make an informed choice for your computing needs.

Whether you're building a personal AI workstation, scaling inference in a data center, or training massive language models, there's an NVIDIA GPU tailored to your goals.

Written by Jack Zhang from AIChipLink.

AIChipLink, one of the fastest-growing independent electronic component distributors in the world, offers millions of products from thousands of manufacturers, and many of our in-stock parts are available to ship the same day.

We mainly source and distribute integrated circuit (IC) products of brands such as Broadcom, Microchip, Texas Instruments, Infineon, NXP, Analog Devices, Qualcomm, Intel, etc., which are widely used in communication & network, telecom, industrial control, new energy and automotive electronics.

Empowered by AI, Linked to the Future. Get started on AIChipLink.com and submit your RFQ online today!